Deep learning-based solution for smart contract vulnerabilities detection

Abstract

This paper explores the application of deep learning to smart contract vulnerability detection. Smart contracts are an essential part of blockchain technology and are crucial for developing decentralized applications. However, smart contract vulnerabilities can cause financial losses and system crashes. Static analysis tools are frequently used to detect vulnerabilities in smart contracts, but they often produce false positives and false negatives because they rely heavily on predefined rules and lack semantic analysis capabilities. Furthermore, these predefined rules quickly become obsolete and fail to adapt or generalize to new data. In contrast, deep learning methods do not require predefined detection rules and can learn the features of vulnerabilities during training. In this paper, we introduce a deep learning-based solution called Lightning Cat. We train three deep learning models for detecting vulnerabilities in smart contracts: Optimized-CodeBERT, Optimized-LSTM, and Optimized-CNN. Experimental results show that, within Lightning Cat, the Optimized-CodeBERT model surpasses the other methods, achieving an f1-score of 93.53%. To extract vulnerability features precisely, we obtain the code segments of vulnerable functions so that critical vulnerability features are retained. By using the CodeBERT pre-trained model for data preprocessing, we capture the syntax and semantics of the code more accurately. To demonstrate the feasibility of our proposed solution, we evaluate its performance on the SolidiFI-benchmark dataset, which consists of 9369 vulnerable contracts injected with vulnerabilities of seven different types.

Introduction

Blockchain is a new application pattern based on technologies such as point-to-point transmission, encryption algorithms, consensus mechanisms1, and distributed data storage2. Since the emergence of Bitcoin, the basic blockchain system has become widely known among professionals, resulting in the development of numerous blockchain applications. This is made possible by smart contracts3, which are automated programs running in a trusted environment provided by the blockchain4. If smart contracts publicly deployed on the blockchain contain vulnerabilities, attackers can exploit them to launch attacks. For example, on June 17, 2016, The DAO5, the largest crowdfunding project in the blockchain industry at the time, was attacked. The hacker exploited a reentrancy vulnerability and stole 3.6 million Ether, worth around $60 million, from The DAO's asset pool, which directly led to the Ethereum blockchain splitting into ETH (Ethereum) and ETC (Ethereum Classic). On April 22, 2018, hackers exploited an integer overflow vulnerability in the BEC6 smart contract, which was based on the ERC-20 standard. They transferred a significant amount of BEC tokens to two external accounts and dumped them, causing the token price to plummet rapidly to zero and disrupting the market. On February 15, 2020, the bZx protocol7, a set of smart contracts built on Ethereum, experienced its first attack. The attacker profited over three hundred thousand dollars, leading the project to temporarily suspend all functions except lending. On February 18, the bZx protocol was targeted again: the hacker manipulated virtual asset prices by controlling the oracle, making a profit of over 2,000 Ether. These incidents demonstrate that, because smart contracts control substantial amounts of cryptocurrency and financial assets, attacks on them can cause unpredictable asset losses.

Conducting vulnerability detection on smart contracts helps identify and fix potential vulnerabilities at an early stage, ensuring contract security and protecting against asset theft and other security risks. Smart contract vulnerability detection is therefore crucial for ensuring security, preventing financial losses, and maintaining user trust, and it is an essential part of the smart contract development and deployment process.

Current methods for detecting smart contract vulnerabilities include human review, static analysis8, fuzz testing9, and formal verification10. Well-known detection tools include Oyente11, Mythril12, Securify13, Slither14, and Smartcheck15. These tools automatically analyze contract code and cover various common types of smart contract security vulnerabilities, such as reentrancy, incorrect tx.origin authorization, timestamp dependency, and unhandled exceptions. However, they may produce false positives or false negatives because they highly depend on predefined detection rules and lack the ability to accurately comprehend complex code logic. Additionally, predefined rules become outdated quickly and cannot adapt or generalize to new data, which is rapidly evolving in the smart contract domain. In contrast, deep learning approaches learn from data and can continuously update themselves to stay relevant. In recent years, there has been significant research on using deep learning for smart contract vulnerability detection16,17,18,19. However, some methods tend to overlook critical vulnerability features in their data processing approaches, and certain models lack semantic analysis capabilities for vulnerability code, leading to potential false negatives20,21,22.

This paper proposes a deep learning-based solution called Lightning Cat. The solution includes three deep learning models, namely Optimized-CodeBERT, Optimized-LSTM, and Optimized-CNN, which are trained to detect vulnerabilities in smart contracts. To better identify vulnerability features, we obtain code snippets of the functions that contain vulnerabilities, which preserves key features. The CodeBERT pre-trained model23 is employed to preprocess the data, enhancing semantic analysis capabilities24.

The main contributions of this paper can be summarized as follows:

- (1)

This paper designs a smart contracts vulnerabilities detection solution called Lightning Cat using deep learning methods. The solution optimizes three deep learning models.

- (2)

We introduce an effective data preprocessing method that captures the semantic features of smart contract vulnerabilities. During the data preprocessing stage, we retrieve code snippets of functions containing vulnerabilities to extract vulnerability features. We also use the CodeBERT pre-trained model for data preprocessing to enhance the model’s semantic analysis capabilities, with the primary goal of improving model performance.

- (3)

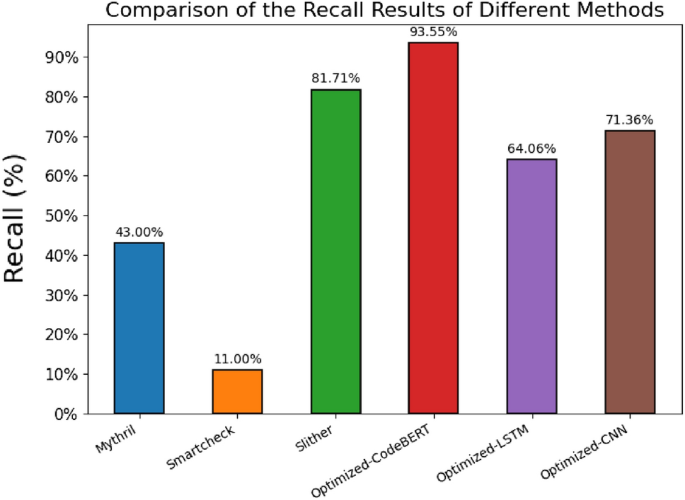

Based on the experimental evaluation results, the Lightning Cat proposed in this paper shows better detection performance than other vulnerability detection tools. The optimized CodeBERT model in Lightning Cat outperforms Optimized-LSTM and Optimized-CNN models, achieving a recall rate of 93.55%, which is 11.85% higher than Slither, a precision rate of 96.77%, and an f1-score of 93.53%.

In addition to smart contract vulnerability detection, the Lightning Cat can also be extended to other areas of code vulnerability detection25. Modern software systems are prone to various types of vulnerabilities, such as buffer overflow, null pointer dereference, and logic errors. For instance, buffer overflow code vulnerabilities are characterized by the use of unsafe string manipulation functions like strcpy and strcat without proper input boundary checks. Null pointer dereference vulnerabilities involve the misuse of dangling pointers by failing to set a pointer to NULL after freeing the associated memory. Logic errors manifest when incorrect logical operators are used in conditional statements, such as using = instead of ==. By learning and training on a large number of code samples, Lightning Cat can extract and comprehend different types of vulnerabilities. It also employs the CodeBERT pre-trained model for data preprocessing, making it better suited for identifying code vulnerabilities. As a result, it can detect various kinds of code vulnerabilities, thereby enhancing the security and dependability of the code.

Related work

In this section, we present related work on the detection of smart contract vulnerabilities, focusing primarily on static analysis techniques and deep learning methods.

Static analysis techniques

The Mythril12 security analysis method is designed to inspect bytecode executed in the Ethereum Virtual Machine (EVM). When defects are found in a program, it can help infer potential causes by analyzing input records. This assists in identifying existing vulnerabilities and reducing the likelihood of their exploitation. It utilizes taint analysis and symbolic execution techniques. However, when performing taint analysis, it faces limitations when crossing memory boundaries, and this limitation becomes more severe when dealing with reference-style parameter invocations. Additionally, Mythril may encounter the state explosion problem when processing complex contracts. Furthermore, although symbolic execution is a powerful general method for detecting vulnerabilities, it may consider branches that are not feasible in actual execution, leading to false positives.

Slither14 is a static code analysis tool used for detecting security vulnerabilities and potential issues in Solidity smart contracts. It integrates numerous detectors capable of identifying different types of vulnerabilities. Compared to Mythril, Slither is much more efficient and performs fast detection. However, Slither lacks formal semantic analysis, which limits its ability to perform more detailed security analysis and accurately determine low-level information such as gas calculations.

SmartCheck15 uses static analysis techniques to detect common security vulnerabilities and coding issues in smart contracts. It offers numerous rule sets to identify different types of vulnerabilities and improve contract security. However, due to its heavy reliance on logical rules for vulnerability detection, it may generate false positives and false negatives. Furthermore, it may fail to detect severe programming errors, leading to overlooked vulnerabilities or incorrect reporting.

Pre-training model

In our study, analyzing smart contracts with deep learning is essentially a Natural Language Processing (NLP)26 task. In general NLP tasks, input texts must be represented as vectors that can be fed into deep learning models for downstream tasks. Pre-trained models have significantly improved text representation in NLP: by training on large-scale datasets, they capture semantic information, textual structure, and grammar rules, and they provide valuable vector embeddings. In this research, we employ the pre-trained model CodeBERT to transform the text of smart contract code into vector embeddings. A distinctive feature of CodeBERT is that it has been pre-trained on a vast amount of code and its associated natural language comments, which enables it to understand the structure and semantics of the code.

As a pre-trained model, CodeBERT achieves state-of-the-art performance on downstream tasks in both natural language (NL) and programming language (PL) processing. Typical pre-trained models such as Seq2Seq, Transformer, and RoBERTa generally perform well in PL-NL processing tasks. CodeBERT nevertheless gains around 3.5, 2, and 1 BLEU27 points over the Seq2Seq28, Transformer28, and RoBERTa29 models, respectively, in code-to-documentation generation tasks23, which indicates that CodeBERT has stronger programming language processing ability.

Deep learning for smart contract vulnerabilities detection

From related work, it has been observed that some tools based on static analysis techniques suffer from false positives and false negatives, mainly due to their reliance on predefined rules. These tools lack the ability to perform syntax and semantic analysis, and the predefined rules can become outdated quickly and cannot adapt or generalize to new data. In contrast, deep learning methods do not require predefined detection rules and can learn vulnerability features during the training process.

Several studies have used deep learning for smart contract vulnerability detection. Gao et al.30 developed a deep learning tool that analyzes Solidity smart contracts on the Ethereum blockchain, helping developers detect repetitive patterns and known bugs. Zhang et al.18 proposed a novel smart contract vulnerability detection method based on an information graph and ensemble learning. In the same year, they also introduced the Serial-Parallel Convolutional Bidirectional Gated Recurrent Network Model incorporating Ensemble Classifiers (SPCBIG-EC) for enhanced smart contract vulnerability detection in IoT devices19.

Huang et al.20 proposed a vulnerabilities detection model for smart contracts using a convolutional neural network. This network converts the binary representation of vulnerable code into RGB images. However, converting binary files to image format makes it challenging to preserve the syntax and semantic information of the code. Although this approach improves accuracy to some extent31, it suffers from a high false negative rate.

Liao et al.21 used N-gram language modeling and tf-idf feature vectors to characterize smart contract source code. They trained traditional machine learning models to verify 13 types of vulnerabilities and employed a gray-box fuzz testing mechanism for real-time transaction validation. However, this method treats certain critical opcodes as stop words during the representation process, which can result in false negatives and missed detections.

Yu et al.22 developed the first systematic and modular framework for smart contract vulnerability detection based on deep learning. They introduced the concept of Vulnerability Candidate Slice (VCS), which focuses on analyzing the dependencies between diverse data and control program elements. Experimental results showed a significant improvement of 25.76% in the F1 score using this approach. However, the performance improvement is not substantial for vulnerability types with limited data and control flow dependencies.

These works provide various methods for data preprocessing aimed at enabling the deep learning models to extract vulnerability features more effectively. However, some methods may result in the deletion of important keywords or the ignoring of critical vulnerability features during data processing32. Additionally, some of the models used may have an insufficient understanding of the semantic characteristics of vulnerability code programs33, which can result in false negatives. To address these issues, we utilized the CodeBERT pre-training model for data preprocessing. CodeBERT is a Transformer-based pre-training model designed specifically for learning and processing source code. It demonstrates stronger semantic analysis abilities, providing significant advantages in smart contract vulnerability detection. Additionally, we introduced the concept of critical vulnerability code segments and removed code unrelated to vulnerabilities from the training samples. We retained only the function code of critical vulnerabilities for learning. This strategy eliminates training noise introduced by redundant code, reduces model complexity, and improves model performance. During the model training stage, we utilized three models – optimized CodeBERT, Optimized-LSTM, and Optimized-CNN – to capture vulnerability features more effectively.

Using the aforementioned methods, our proposed Lightning Cat tool extracts critical features from vulnerability code and has strong semantic analysis capabilities, which significantly improves model performance.

Methodology

The CodeBERT model achieves state-of-the-art performance in tasks related to programming language processing23. It captures semantic connections between natural language and programming language. According to Yuan et al.34, CodeBERT reaches 61% accuracy in software vulnerability discovery, higher than the mainstream models Word2Vec35, FastText36, and GloVe37 (46%, 41%, and 29%, respectively). Because the smart contracts in our research are written in the programming language Solidity, we optimize the CodeBERT model and employ it in our study. CNN is a widely used deep learning model that generalizes well to both images and text. LSTM is a deep learning model suited to long texts; it can learn the sequential order within a text, which CNN is not well suited to do. According to Xu et al.38, both CNN and LSTM achieve high accuracy (0.958 and 0.959, respectively) in source code vulnerability detection. We therefore employ CNN and LSTM models as baselines for comparison with the CodeBERT model and analyze their performance on our tasks.

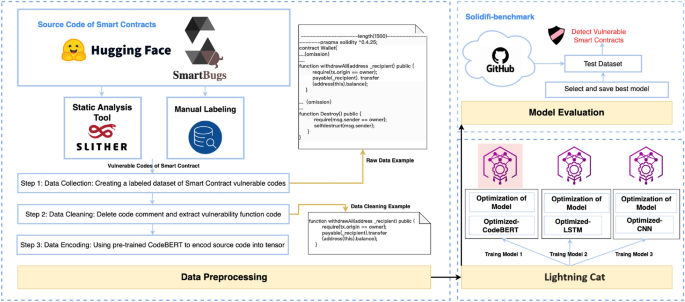

Figure 1 illustrates the complete process of developing Lightning Cat, a vulnerability detection model for smart contracts, which consists of three stages. The first stage builds and preprocesses the labeled dataset of vulnerable Solidity code. The second stage trains three models (Optimized-CodeBERT, Optimized-LSTM, and Optimized-CNN) and compares their performance to determine the best one. Finally, in the third stage, the selected model is evaluated on the SolidiFI-benchmark dataset to assess its effectiveness in detecting vulnerabilities.

Data preprocessing

During the data preprocessing phase, we collect three datasets and subsequently perform data cleaning. Finally, we employ the CodeBERT model to encode the data.

Data collection

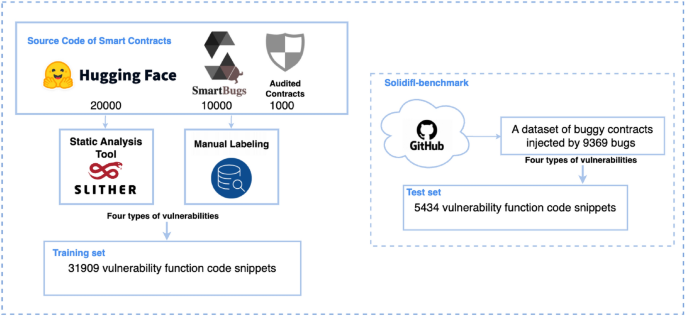

Our primary training dataset draws on three sources: 10,000 contracts from the Slither Audited Smart Contracts Dataset39, 20,000 contracts from smartbugs-wild40, and 1,000 typical smart contracts with vulnerabilities identified through expert audits, for a total of 31,000 contracts. To compare results effectively with other auditing tools, we use the SolidiFI benchmark dataset41 as our test set; its contracts contain 9,369 injected bugs.

Dataset processing

Our test set, SolidiFI-benchmark, covers three static detection tools (Slither, Mythril, and SmartCheck) and four common vulnerability types identified by all of them: Re-entrancy, Timestamp-Dependency, Unhandled-Exception, and tx.origin. To ensure the completeness and fairness of the results, Lightning Cat focuses primarily on these four types of vulnerabilities for comparison. Table 1 displays the mapping between the four types of vulnerabilities and the three auditing tools.

Considering that a complete contract might consist of multiple Solidity files and a single Solidity file might contain several vulnerable code snippets, we use the Slither tool to extract 30,000 functions containing these four types of vulnerabilities from the data sources39,40. Additionally, we manually annotate the problematic code snippets within the contracts audited by experts, 1,909 snippets in total. The training set therefore comprises 31,909 code snippets. For the test set, we extract 5,434 code snippets related to these four vulnerabilities from the SolidiFI-benchmark dataset. The processing procedures for the training and test sets are shown in Fig. 2.

Data cleaning

The length of a smart contract typically depends on its functionality and complexity. Some complex contracts exceed several thousand tokens. However, handling long text has been a long-standing challenge in deep learning42. Transformer-based models such as CodeBERT can handle at most 512 tokens per input, two of which are reserved for the special start and end tokens, leaving 510 for the code itself. Therefore, we attempted two methods to address text longer than 510 tokens.

Direct splitting

The data is split into chunks of 510 tokens each, and all the chunks are assigned the same label. For example, a Re-entrancy vulnerability sample with a length of 2000 tokens is split into four chunks: three full chunks of 510 tokens and a final chunk of 470 tokens. Chunks with fewer than 510 tokens are padded with zeros. However, the training results show that the model's loss does not converge. We speculate that this is due to noise introduced by chunks unrelated to the vulnerability, which negatively affects the model's generalization capability.
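
The direct-splitting step can be sketched as follows. This is a minimal illustration in plain Python rather than the authors' implementation: the function name `split_into_chunks`, the constants `CHUNK_LEN` and `PAD_ID`, and the integer token IDs are assumptions made for the example.

```python
from typing import List, Tuple

CHUNK_LEN = 510  # token budget per chunk, as stated in the text
PAD_ID = 0       # padding value ("zeros" in the text)


def split_into_chunks(token_ids: List[int], label: str,
                      chunk_len: int = CHUNK_LEN,
                      pad_id: int = PAD_ID) -> List[Tuple[List[int], str]]:
    """Split one labeled token sequence into fixed-length chunks.

    Every chunk inherits the label of the whole sequence, and a short
    final chunk is right-padded with pad_id up to chunk_len.
    """
    chunks = []
    for start in range(0, len(token_ids), chunk_len):
        piece = token_ids[start:start + chunk_len]
        piece = piece + [pad_id] * (chunk_len - len(piece))
        chunks.append((piece, label))
    return chunks


# Hypothetical 2000-token sample (IDs 1..2000, so 0 only ever means padding).
sample = list(range(1, 2001))
chunks = split_into_chunks(sample, "Re-entrancy")
print(len(chunks), chunks[-1][0].count(PAD_ID))
```

Because every chunk carries the label of the whole sample, chunks that contain no vulnerable code are still marked as vulnerable, which is consistent with the label noise the text suspects.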

Vulnerability function code extraction

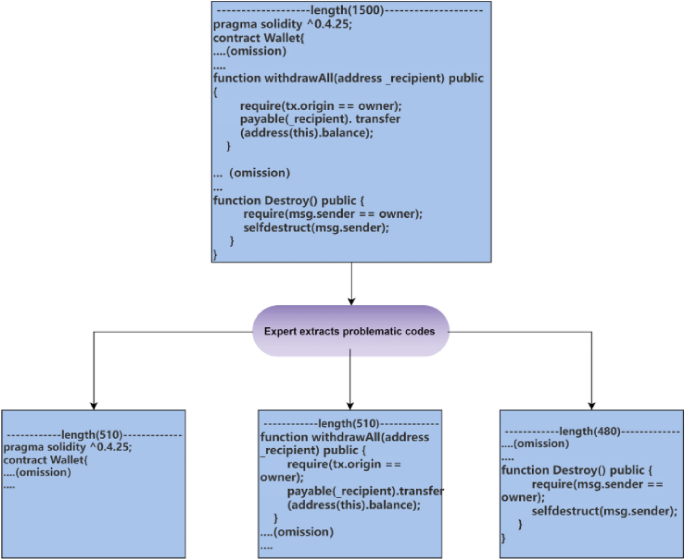

Audit experts extracted the function code of vulnerabilities from smart contracts and assigned corresponding vulnerability labels. If the extracted code exceeds 510 tokens, it is truncated, and if the code falls short of 510 tokens, it is padded with zeros. This approach ensures consistent input data length, addresses the length limitation of Transformer models, and preserves the characteristics of the vulnerabilities.

After comparing the two methods, we observed that training on vulnerability-based function code helped the model’s loss function converge better. Therefore, we chose to use this data processing method in subsequent experiments. Additionally, we removed unrelated characters such as comments and newline characters from the functions to enhance the model’s performance. As shown in Fig. 3, we only extracted the function parts containing the vulnerability code, reducing the length of the training dataset while maintaining the vulnerability characteristics. This approach not only improves the model’s accuracy, but also enhances its generalization ability.

Figure 3: Extraction of vulnerable function code. We partition the smart contract as a whole and extract only the functions in which vulnerabilities are present. In the example shown, we focus on the "withdrawALL" function, which serves as our training data. If a contract contains multiple vulnerabilities, we extract multiple corresponding functions.
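
The cleaning steps described in this subsection (removing comments and newline characters, then truncating or zero-padding to 510 tokens) can be sketched as below. This is a simplified illustration, not the authors' code: the helper names are assumptions, the regular expressions do not handle comment markers inside string literals, and the Solidity body of `withdrawALL` is a hypothetical stand-in for the function shown in the figure.

```python
import re


def clean_function_source(src: str) -> str:
    """Strip comments and collapse newlines/whitespace in a Solidity function."""
    src = re.sub(r"/\*.*?\*/", " ", src, flags=re.DOTALL)  # block comments
    src = re.sub(r"//[^\n]*", " ", src)                    # line comments
    return re.sub(r"\s+", " ", src).strip()                # newlines, tabs, space runs


def truncate_or_pad(token_ids, max_len=510, pad_id=0):
    """Cut sequences longer than max_len; right-pad shorter ones with pad_id."""
    clipped = list(token_ids[:max_len])
    return clipped + [pad_id] * (max_len - len(clipped))


# Hypothetical vulnerable function, used only to exercise the helpers.
example = '''
function withdrawALL() public {
    // pay out first, update state later (the risky order)
    uint amount = balances[msg.sender]; /* read the
    caller balance */
    msg.sender.call.value(amount)("");
    balances[msg.sender] = 0;
}
'''
cleaned = clean_function_source(example)
print(cleaned)
```

Keeping only the cleaned function body shortens each sample while leaving the statements that characterize the vulnerability intact, which is the stated goal of this preprocessing choice.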

Data encoding

CodeBERT is a pretraining model based on the Transformer architecture, specifically designed for learning and processing source code. By undergoing pretraining on extensive code corpora, CodeBERT acquires knowledge of the syntax and semantic relationships inherent in source code, as well as the interactive dynamics between different code segments.

During the data preprocessing stage, CodeBERT is employed due to its strong representation ability. The source code undergoes tokenization, where it is segmented into tokens that represent semantic units. Subsequently, the tokenized sequence is encoded into numerical representations, with each token mapped to a unique integer ID, forming the input token ID sequence. To meet the model’s input requirements, padding and truncation operations are applied, ensuring a fixed sequence length. Additionally, an attention mask is generated to distinguish relevant positions from padded positions containing invalid information. Thus, the processed data includes input IDs and attention masks, transforming the source code text into a numericalized format compatible with the model while indicating the relevant information through the attention mask.
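
The encoding pipeline above (tokenize, map tokens to integer IDs, pad or truncate to a fixed length, build an attention mask) can be illustrated with a toy tokenizer. This sketch is an assumption-laden stand-in: the real CodeBERT tokenizer uses a learned subword vocabulary, whereas the regex tokenizer, the vocabulary, the special-token IDs, and `max_len=16` here are invented for the example.

```python
import re
from typing import Dict, List, Tuple

# Toy special-token IDs chosen for illustration (padding is 0, as in the text).
SPECIAL = {"<pad>": 0, "<s>": 1, "</s>": 2, "<unk>": 3}


def tokenize(code: str) -> List[str]:
    """Toy tokenizer: identifiers, numbers, and single punctuation marks."""
    return re.findall(r"[A-Za-z_]\w*|\d+|\S", code)


def build_vocab(corpus: List[str]) -> Dict[str, int]:
    """Assign each distinct token the next free integer ID."""
    vocab = dict(SPECIAL)
    for code in corpus:
        for tok in tokenize(code):
            vocab.setdefault(tok, len(vocab))
    return vocab


def encode(code: str, vocab: Dict[str, int],
           max_len: int = 16) -> Tuple[List[int], List[int]]:
    """Return (input_ids, attention_mask), both exactly max_len long."""
    body = [vocab.get(t, vocab["<unk>"]) for t in tokenize(code)]
    body = body[: max_len - 2]                  # leave room for <s> and </s>
    ids = [vocab["<s>"]] + body + [vocab["</s>"]]
    mask = [1] * len(ids)                       # 1 marks a real token
    pad = max_len - len(ids)
    return ids + [vocab["<pad>"]] * pad, mask + [0] * pad  # 0 marks padding


snippet = "balances[msg.sender] = 0;"
vocab = build_vocab([snippet])
input_ids, attention_mask = encode(snippet, vocab, max_len=16)
print(input_ids)
print(attention_mask)
```

The attention mask has the same length as the ID sequence, so a model can ignore the padded tail without the padding value itself carrying any meaning.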

For Optimized-LSTM and Optimized-CNN models, direct processing of input IDs and masks is not feasible. Therefore, CodeBERT is utilized to further process the data and convert it into tensor representations of embedding vectors. The input IDs and attention masks obtained from the preprocessing steps are passed to the CodeBERT model to obtain meaningful representations of the source code data. These embedding vectors can be used as inputs for Optimized-LSTM and Optimized-CNN models, facilitating their integration for subsequent vulnerability detection.

Models

In the current stage, our approach involves the utilization of three machine learning models: Optimized-CodeBERT, Optimized-LSTM, and Optimized-CNN. The CodeBERT model is specifically fine-tuned to enhance its compatibility with the target task by accepting preprocessed input IDs and attention masks as input. However, in the case of Optimized-LSTM and Optimized-CNN models, we do not conduct any fine-tuning on the CodeBERT model for data preprocessing.

Model 1: Optimized-CodeBERT

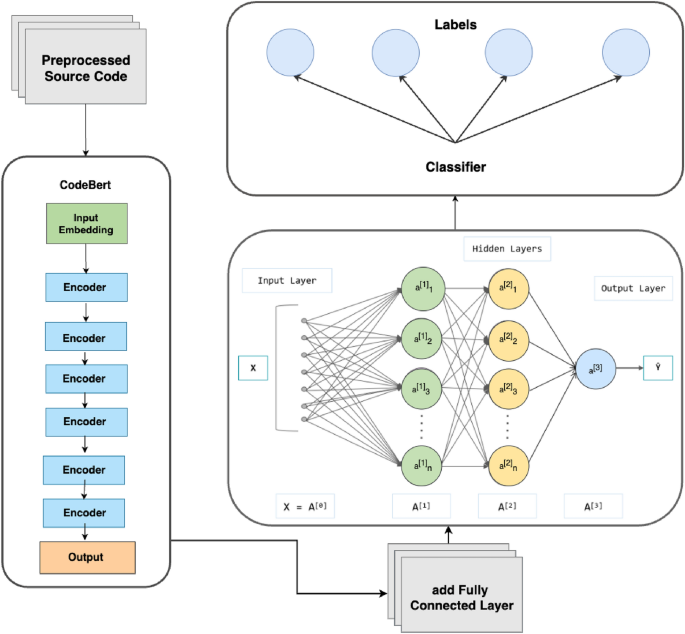

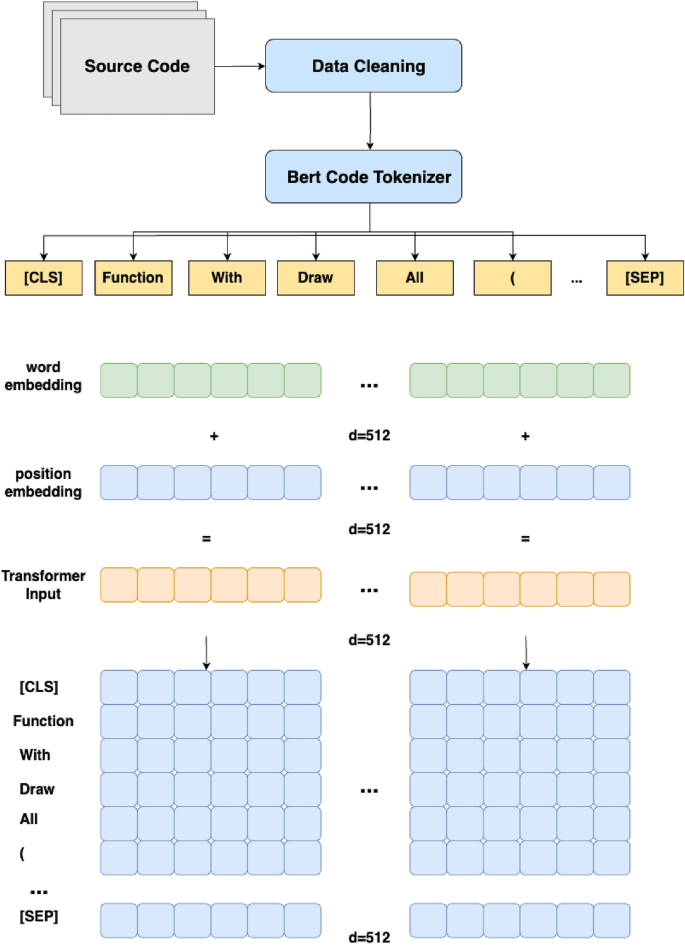

CodeBERT is a specialized application that utilizes the Transformer model for learning code representations in code-related tasks. In this paper, we focus on fine-tuning the CodeBERT model to specifically address the needs of smart contract vulnerability detection. The CodeBERT model is built upon the Transformer architecture, which comprises multiple encoder layers. Prior to entering the encoder layers of CodeBERT, the input data undergoes an embedding process. Following the encoding stage of CodeBERT, fully connected layers are added for classification purposes. The model architecture of our CodeBERT implementation is depicted in Fig. 4.

Word Embedding and Position Encoding In the data preprocessing stage, we have utilized a specialized CodeBERT tokenizer to process each word into the input information. In this model tranining stage, the tokenizer employs embedding methods, which are used to convert text or symbol data into vector representations. This processing transforms each word into a 512-dimensional word embedding. In addition, we introduce position embedding, which is a technique introduced to assist the model in understanding the positional information within the sequence. It associates each position with a specific vector representation to express the relative positions of tokens in the sequence. For a given position i and dimension k, the Position Encoding PE(�,�) is computed as follows:

词嵌入和位置编码 在数据预处理阶段,我们利用专门的CodeBERT分词器将每个词处理为输入信息。在此模型转换阶段,分词器采用嵌入方法,用于将文本或符号数据转换为向量表示。此处理将每个单词转换为 512 维单词嵌入。此外,我们还引入了位置嵌入,这是一种帮助模型理解序列中的位置信息的技术。它将每个位置与特定的向量表示相关联,以表示序列中标记的相对位置。对于给定的位置 i 和维度 k,位置编码 \(\text {PE}(i, k)\) 的计算公式如下:

Here, d represents the embedding dimension of the input sequence. The formula uses sine and cosine functions to generate position vectors, injecting positional information into the embeddings. The exponential term \(\frac{i}{10000^{2k/d}}\) controls the rate of change of the position encoding, ensuring differentiation among positions. By adding the Position Encoding to the Word Embedding, positional information is integrated into the embedded representation of the input sequence. This enables CodeBERT to better comprehend the semantics and contextual relationships of different positions in the code. The processing steps are illustrated in Fig. 5.
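The position encoding described above can be sketched in a few lines of plain Python. This is an illustrative sketch, not the authors' implementation: it assumes the common Transformer convention in which even dimensions use the sine and odd dimensions use the cosine, and it assumes d is even. The function names `position_encoding` and `add_position` are hypothetical.

```python
import math

def position_encoding(seq_len, d):
    """Return a seq_len x d table of sinusoidal position encodings.

    Assumption: even columns hold sin(i / 10000^(2k/d)) and odd columns
    hold the matching cosine; d is assumed to be even.
    """
    table = [[0.0] * d for _ in range(seq_len)]
    for i in range(seq_len):          # position in the sequence
        for k in range(d // 2):       # index of the sine/cosine pair
            angle = i / (10000 ** (2 * k / d))
            table[i][2 * k] = math.sin(angle)
            table[i][2 * k + 1] = math.cos(angle)
    return table

def add_position(embeddings):
    """Add the position encoding element-wise to each word embedding."""
    pe = position_encoding(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(row, pe_row)]
            for row, pe_row in zip(embeddings, pe)]
```

Calling `add_position` on a list of token embeddings returns embeddings of the same shape with the positional signal mixed in, which is the summation step the text refers to.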

Encoder layers The CodeBERT model performs deep representation learning by stacking multiple encoder layers. Each encoder layer comprises two sub-layers: multi-head self-attention and feed-forward neural network. The self-attention mechanism helps encode the relationships and dependencies between different positions in the input sequence. The feed-forward neural network is responsible for independently transforming and mapping the features at each position.

The multi-head self-attention mechanism calculates attention weights, denoted as \(w_{ij}\), for each position i in the input code sequence. The attention weights are computed using the following equation:

\[ w_{ij} = \text{softmax}\left(\frac{q_i k_j^{T}}{\sqrt{d}}\right) \]

Here, \(q_i\) represents the query at position i, \(k_j\) denotes the key at position j, and d is the dimension of the queries and keys; the softmax is taken over the positions j. The output of the self-attention mechanism at position i, denoted as \(o_i\), is obtained by multiplying the attention weights \(w_{ij}\) with their corresponding values \(v_j\) and summing them up:

\[ o_i = \sum_{j=1}^{n} w_{ij} v_j \]

where n is the length of the input sequence.
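To make the attention computation concrete, the following is a minimal single-head sketch in plain Python. It assumes the standard scaled dot-product form (a softmax over the scaled query-key products); the multi-head case would run several such heads in parallel and concatenate their outputs. The function names are hypothetical and the code is not taken from the authors' implementation.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Single-head scaled dot-product attention over n positions."""
    d = len(keys[0])                  # dimension of queries and keys
    outputs = []
    for q_i in queries:
        # Attention weights w_ij of position i over all positions j.
        scores = [sum(a * b for a, b in zip(q_i, k_j)) / math.sqrt(d)
                  for k_j in keys]
        w_i = softmax(scores)
        # Output o_i: weighted sum of the value vectors v_j.
        o_i = [sum(w * v_j[c] for w, v_j in zip(w_i, values))
               for c in range(len(values[0]))]
        outputs.append(o_i)
    return outputs
```

When all keys are identical the weights become uniform, so each output is simply the mean of the value vectors; this is a convenient sanity check for the sketch.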

Each encoder layer also contains a feed-forward neural network sub-layer, which processes the output of the self-attention sub-layer using the following equation:

\[ \text{FFN}(x) = \max(0, xW_1 + b_1)W_2 + b_2 \]

Here, x represents the output of the self-attention sub-layer, and \(W_1, b_1\) and \(W_2, b_2\) are the parameters of the feed-forward neural network.
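The feed-forward sub-layer can be illustrated with a small plain-Python sketch. It assumes the usual Transformer form, that is, two linear maps with a ReLU in between, applied independently at each position; the helper names `linear` and `feed_forward` and the toy weights in the usage note are hypothetical.

```python
def linear(x, weights, bias):
    """Compute xW + b for a vector x and a matrix W given as a list of rows."""
    return [sum(x_r * weights[r][c] for r, x_r in enumerate(x)) + bias[c]
            for c in range(len(bias))]

def feed_forward(x, w1, b1, w2, b2):
    """Position-wise feed-forward network: ReLU(xW1 + b1)W2 + b2."""
    hidden = [max(0.0, h) for h in linear(x, w1, b1)]   # ReLU activation
    return linear(hidden, w2, b2)
```

For example, with an identity first layer, `feed_forward([1.0, -2.0], [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0], [[2.0], [3.0]], [0.5])` zeroes the negative component before the second linear map is applied.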

Fully connected layers To output the classification labels, we added fully connected layers. Firstly, we added a new linear layer with 100 features on top of the existing linear layer. To avoid the limited capacity of a single linear layer, we utilized the ReLU activation function. Additionally, to prevent overfitting, we introduced a dropout layer with a dropout rate of 0.1 after the activation layer. Lastly, we used a linear layer with four features for the output. During the fine-tuning process, the parameters of these new layers were updated.

Model 2: optimized-LSTM

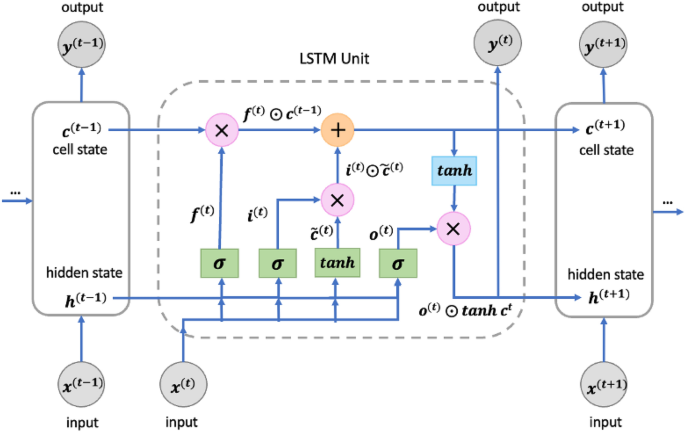

The Optimized-LSTM model is specifically designed for processing sequential data, capable of capturing temporal dependencies and syntactic-semantic information [43]. For the task of smart contract vulnerability detection, our constructed Optimized-LSTM model provides a serialization-based representation of Solidity source code, taking into account the order of statements and function calls. The Optimized-LSTM model captures the syntax, semantics, and dependencies within the code, enabling an understanding of the logical structure and execution flow. Compared to traditional RNNs, the Optimized-LSTM model we constructed addresses the issue of vanishing or exploding gradients when handling long sequences [44]. This is accomplished through the key mechanism of gated cells, which enable selective retention or forgetting of previous states. The model consists of shared components across time steps, including the cell, input gate, output gate, and forget gate. In the Optimized-LSTM model, we have defined an LSTM layer and a fully connected layer, with the LSTM layer being the core component. Within the LSTM layer, the input \(x^{(t)}\), the output from the previous time step \(h^{(t-1)}\), and the cell state from the previous time step \(c^{(t-1)}\) are fed into an LSTM unit. This unit contains a forget gate \(f^{(t)}\), an input gate \(i^{(t)}\), and an output gate \(o^{(t)}\), as shown in Fig. 6.

In the model, we utilize a bidirectional Optimized-LSTM, where the forward Optimized-LSTM and backward Optimized-LSTM are independent and concatenated at the final step. This allows for better capture of long-term dependencies and local correlations within the sequence. During the forward propagation of the model, the input x is first passed through the Optimized-LSTM layer to obtain the output h and the final cell state c. Since the lengths of the data instances may vary, we calculate the average output by averaging the outputs at each time step in h. Then, the average output is fed into a fully connected layer to obtain the final prediction output y. We used the cross-entropy loss function L for training, which is defined as:

\[ L = -\sum_{i}\sum_{j=1}^{N} y^{(i,j)} \log \hat{y}^{(i,j)} \]

Here, N represents the number of classes, \(y^{(i,j)}\) denotes the probability of the jth class in the true label of sample i, and \(\hat{y}^{(i,j)}\) represents the probability of sample i being predicted as the jth class by the model.
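The averaging step and the cross-entropy loss can be sketched as follows in plain Python. This is only an illustration under simple assumptions: `h` is a list of per-time-step output vectors for one sample, the labels and predictions are per-class probabilities, and a small `eps` guards against taking the logarithm of zero. The function names are hypothetical.

```python
import math

def mean_pool(h):
    """Average the per-time-step outputs h (T x D) into a single D-vector."""
    steps = len(h)
    return [sum(h_t[c] for h_t in h) / steps for c in range(len(h[0]))]

def cross_entropy(y_true, y_pred, eps=1e-12):
    """Sum of -y * log(y_hat) over all samples i and classes j."""
    loss = 0.0
    for y_i, p_i in zip(y_true, y_pred):
        for y_ij, p_ij in zip(y_i, p_i):
            loss -= y_ij * math.log(max(p_ij, eps))
    return loss
```

Because the mean is taken over however many time steps a sample has, sequences of different lengths all map to a vector of the same size before the fully connected layer.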

Model 3: optimized-CNN

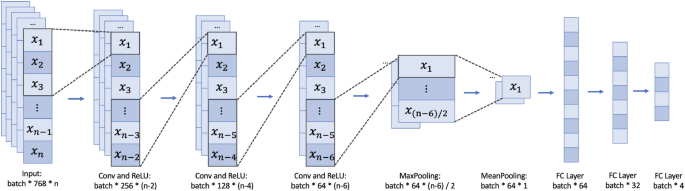

The Convolutional Neural Network (CNN) is a feedforward neural network that exhibits remarkable advantages when processing two-dimensional data, such as the two-dimensional structures represented by code [45]. In our model design, we transform the code token sequence into a matrix, so that the CNN can efficiently extract local features of the code and capture its spatial structure, including the syntax structure, relationships between code blocks, and important patterns within the code.

The Optimized-CNN primarily consists of convolutional layers, pooling layers, fully connected layers, and activation functions. Its core idea is to extract features from input data through convolution operations, reduce the dimensionality of feature maps through pooling layers, and ultimately perform classification or regression tasks through fully connected layers [46]. The key module of the Optimized-CNN is the convolutional layer, which is computed as follows:

\[ y^{(i,j)} = \sigma\left(\sum_{k}\sum_{l}\sum_{m} w^{(k,l,m)}\, x^{(i+l-1,\, j+m-1,\, k)} + b\right) \]

Here, \(x^{(i,j,k)}\) represents the element value of the input data at the i-th row, j-th column, and k-th channel, \(w^{(k,l,m)}\) represents the weight value of the k-th channel, l-th row, and m-th column of the convolutional kernel, and b represents the bias term. σ denotes the activation function, and in this case, we use the Rectified Linear Unit (ReLU).

The output of the convolutional layer is passed to the pooling layer for further processing. The commonly used pooling methods are Max Pooling and Average Pooling. In this case, we employ Max Pooling, and the calculation formula is as follows:

\[ y^{(i,j)} = \max_{(l,m) \in \mathcal{R}_{i,j}} x^{(l,m)} \]

where \(\mathcal{R}_{i,j}\) denotes the pooling window that produces the output at position (i, j).

Pooling operations reduce the dimensionality of feature maps and the number of model parameters, and to some extent alleviate overfitting. Finally, a fully connected layer is used to compute the model output, which is expressed as:

\[ y = \sigma(Wx + b) \]

Here, x represents the output of the previous layer, W and b denote the weights and bias terms, and σ is the activation function. By stacking multiple convolutional layers, pooling layers, and fully connected layers, we construct an Optimized-CNN model as shown in Fig. 7, which has powerful feature extraction and classification capabilities for smart contract classification.
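The convolution and pooling steps can be illustrated with a small plain-Python sketch. It makes several simplifying assumptions that are not stated in the paper: a single input channel, a "valid" convolution with stride 1 (implemented as cross-correlation, as most deep learning frameworks do), and non-overlapping square pooling windows. The function names are hypothetical.

```python
def conv2d_relu(x, kernel, bias=0.0):
    """Valid 2D convolution (cross-correlation) of one channel, then ReLU."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(x) - kh + 1, len(x[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            s = bias
            for l in range(kh):          # kernel row
                for m in range(kw):      # kernel column
                    s += kernel[l][m] * x[i + l][j + m]
            row.append(max(0.0, s))      # ReLU activation
        out.append(row)
    return out

def max_pool(x, size=2):
    """Non-overlapping max pooling with a size x size window."""
    return [[max(x[i + l][j + m] for l in range(size) for m in range(size))
             for j in range(0, len(x[0]) - size + 1, size)]
            for i in range(0, len(x) - size + 1, size)]
```

Applying `conv2d_relu` and then `max_pool` to a token matrix shrinks it twice: first by the kernel size, then by the pooling window, which is the dimensionality reduction the text describes.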

Experiments

To ensure a fair evaluation of different methods, we trained and tested them in identical environments. All experiments were performed on a computer featuring an Intel Xeon(R) Silver 4210R CPU clocked at 2.4GHz, dual RTX A5000 GPUs, and 128GB of RAM, running on the Windows operating system, utilizing the PyCharm software, the PyTorch framework, and the Python programming language.

Parameter settings

We then tuned each hyperparameter. For the Optimized-CodeBERT model, we employed the AdamW optimizer [47] and conducted a grid search to find the optimal hyperparameter settings. The hyperparameters and their corresponding search ranges were as follows: learning rate: (3e-5, 1e-4, 3e-4), batch size: (32, 64, 128, 256), dropout rate: (0.1, 0.2, 0.3, 0.4, 0.5), L2 regularization: (1e-6, 1e-5, 1e-4, 1e-3, 1e-2, 1e-1), learning rate decay gamma: (0.98, 0.99), number of fully connected layers: (1, 2, 3), and number of epochs: (10, 20, 30, 40, 50, 60). The cross-entropy loss was calculated using the BCEWithLogitsLoss method. The best parameter settings corresponding to the final results are shown in Table 2.
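To give a sense of the size of this search space, the sketch below enumerates the listed ranges as a full Cartesian product. Whether the authors evaluated every combination or tuned the hyperparameters one at a time is not stated, so the exhaustive product is an assumption; the dictionary keys and the `iter_configs` helper are hypothetical names.

```python
from itertools import product

# Search ranges as listed in the text; the key names are illustrative.
search_space = {
    "learning_rate": [3e-5, 1e-4, 3e-4],
    "batch_size": [32, 64, 128, 256],
    "dropout": [0.1, 0.2, 0.3, 0.4, 0.5],
    "l2": [1e-6, 1e-5, 1e-4, 1e-3, 1e-2, 1e-1],
    "lr_decay_gamma": [0.98, 0.99],
    "num_fc_layers": [1, 2, 3],
    "epochs": [10, 20, 30, 40, 50, 60],
}

def iter_configs(space):
    """Yield one dict per combination of hyperparameter values."""
    keys = list(space)
    for values in product(*(space[k] for k in keys)):
        yield dict(zip(keys, values))

# Number of configurations in an exhaustive grid over these ranges.
num_configs = sum(1 for _ in iter_configs(search_space))
```

An exhaustive grid over these ranges contains 12,960 configurations, which suggests why a staged or per-hyperparameter search may be more practical than training every combination.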

For the Optimized-LSTM model, the best parameter settings are shown in Table 3.

For the Optimized-CNN model, the best parameter settings are shown in Table 4.

Metrics

To evaluate our methods, we use various performance metrics, including accuracy, F1 score, recall, and precision. Accuracy is the ratio of correctly predicted instances (both true positives and true negatives) to the total number of instances. It provides a general measure of overall correctness.

F1 score is another important metric that combines both precision and recall. It considers the trade-off between them and provides a balance between the two. F1 score is particularly useful when the dataset is imbalanced or when both precision and recall are important.

Precision, also known as positive predictive value, measures the proportion of correctly predicted positive instances (true positives) out of all instances predicted as positive. It focuses on the accuracy of positive predictions.

Recall, also known as sensitivity or true positive rate, calculates the proportion of correctly predicted positive instances (true positives) out of all actual positive instances. It focuses on the ability of the model to identify all positive instances.
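The four metrics can be computed directly from the confusion-matrix counts. The following is a minimal sketch using the standard definitions; the function name and the convention of returning 0.0 when a denominator is zero are assumptions made for illustration.

```python
def classification_metrics(tp, fp, tn, fn):
    """Accuracy, precision, recall and F1 from confusion-matrix counts."""
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}
```

For instance, with 8 true positives, 2 false positives, 85 true negatives, and 5 false negatives, accuracy is high (0.93) while recall is only 8/13, which shows why accuracy alone can be misleading on imbalanced data.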

Results

We used SolidiFI-benchmark as the testing dataset, and the comparison results of the metrics of the three models in Lightning Cat are shown in Table 5.

Clearly, the performance of Optimized-CodeBERT is the best among all models (as shown in Table 5), with the highest scores in all metrics. Its F1-score is 30.48% higher than that of Optimized-LSTM and 22.91% higher than that of Optimized-CNN.

In addition, we obtained the metrics results of the three models for four types of vulnerabilities, as shown in Table 6.

From Table 6, it can be seen that among the four types of vulnerabilities, Optimized-CodeBERT has the best detection performance for Timestamp-Dependency and Unhandled-Exceptions. However, for the Re-entrancy vulnerability, the Recall of Optimized-CodeBERT is lower than that of Optimized-LSTM and Optimized-CNN. When detecting the tx.origin vulnerability, Optimized-CodeBERT has higher Accuracy, Recall, and F1 than the other two models, while its Precision is 0.07% lower than that of Optimized-CNN. The three models use the same training set, but their detection performance differs because they have different modeling and generalization capabilities. Overall, Optimized-CodeBERT has better detection performance.

We obtained the vulnerability detection results of different methods in the SolidiFI-benchmark testing dataset, and the true positive results detected by each method are shown in Table 7. "NA" indicates vulnerabilities that cannot be identified by the corresponding method.

Table 7 displays the vulnerability detection results for different types of vulnerabilities. "Injected bugs" represents the actual quantity of vulnerabilities, and "tp" (true positive) represents the number of detected vulnerabilities. From Table 7, it can be seen that among these auditing tools (Manticore, Mythril, Oyente, Securify, Slither), Slither detected the most actual vulnerabilities. In terms of the detection results of all methods, Slither, Optimized-LSTM, and Optimized-CNN detected the most true positives for the Re-entrancy vulnerability, while Optimized-CodeBERT detected the most vulnerabilities for the other three types (Timestamp_Dependency, Unhandle_Exceptions, tx.origin).

To better compare the detection performance of different methods, we will only compare the methods that can detect the four types of vulnerabilities. The comparison results of the Recall of different methods are shown in Fig. 8.

Figure 8 illustrates the comparison of Recall results among different methods, measuring the classification models’ capability to accurately identify true positive samples. The figure compares the recall rates of six methods, namely Mythril, Smartcheck, Slither, Optimized-CodeBERT, Optimized-LSTM, and Optimized-CNN. The findings indicate that the Optimized-CodeBERT model exhibits the highest recall at 93.55%, surpassing Slither by 11.85%. This highlights the Optimized-CodeBERT model’s exceptional accuracy and reliability in detecting and identifying true positive samples. Conversely, the Optimized-LSTM and Optimized-CNN models demonstrate relatively lower recall rates of 64.06% and 71.36%, respectively, suggesting potential challenges or limitations in recognizing true positive samples.

Based on the insights gained from Fig. 8, it is evident that the Optimized-CodeBERT method excels in recall, displaying superior proficiency in identifying true positive samples. These findings offer valuable guidance for model selection and practical applications.

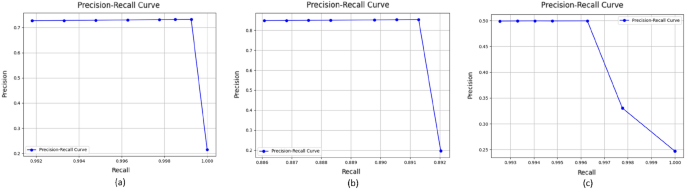

To comprehensively compare the performance of the three models, we set different thresholds (i.e. 0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9) for each of them. The precision and recall values under these thresholds and the precision-recall curves of the three models are presented in Fig. 9. The precision-recall curve is created by plotting precision values on the y-axis and recall values on the x-axis. Each point on the curve represents a different threshold used for classifying the positive class. Thresholds refer to the probability thresholds used by the classification model to determine which class an instance belongs to. According to the results shown in Fig. 9, Optimized-CodeBERT performs better on our task.
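The points of such a precision-recall curve can be obtained by sweeping the threshold over the predicted probabilities. The sketch below assumes binary labels and one score per instance; the function name, the toy inputs in the usage note, and the convention of reporting a precision of 1.0 when nothing is predicted positive are illustrative choices, not details from the paper.

```python
def precision_recall_points(y_true, y_score, thresholds):
    """Return (threshold, precision, recall) for each probability threshold."""
    points = []
    for t in thresholds:
        pred = [1 if s >= t else 0 for s in y_score]
        tp = sum(1 for y, p in zip(y_true, pred) if y == 1 and p == 1)
        fp = sum(1 for y, p in zip(y_true, pred) if y == 0 and p == 1)
        fn = sum(1 for y, p in zip(y_true, pred) if y == 1 and p == 0)
        # Convention: precision is 1.0 when no instance is predicted positive.
        precision = tp / (tp + fp) if tp + fp else 1.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        points.append((t, precision, recall))
    return points

# Thresholds as listed in the text.
THRESHOLDS = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
```

Calling `precision_recall_points([1, 1, 0, 0], [0.95, 0.55, 0.65, 0.05], THRESHOLDS)` yields one point per threshold; raising the threshold typically trades recall for precision.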

We further conducted the McNemar test [48] to compare the accuracy differences between the Optimized-CodeBERT model and the Optimized-LSTM model (shown in Table 8) as well as the Optimized-CNN model (shown in Table 9). The accuracy of Optimized-CodeBERT differs with extreme significance from that of both Optimized-LSTM and Optimized-CNN for the vulnerability classes Re-entrancy, Timestamp-Dependency and Unhandled-Exceptions, but with little significance for the vulnerability class tx.origin. We speculate that this is because the features of the tx.origin vulnerability are relatively conspicuous, making it easier for the model to recognize and classify.

Table 8 McNemar test (accuracy difference between Optimized-CodeBERT and Optimized-LSTM).

Table 9 McNemar test (accuracy difference between Optimized-CodeBERT and Optimized-CNN).
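For readers unfamiliar with the McNemar test, the sketch below shows how the statistic is computed from the two discordant counts: the number of test samples that one model classifies correctly and the other does not, in each direction. It uses the chi-square approximation with one degree of freedom and an optional continuity correction; which variant the authors used is not stated, and the function name and example counts are hypothetical.

```python
import math

def mcnemar_test(b, c, continuity=True):
    """McNemar chi-square statistic and p-value (1 degree of freedom).

    b: samples model A classifies correctly and model B does not.
    c: samples model B classifies correctly and model A does not.
    """
    if b + c == 0:
        return 0.0, 1.0               # the models never disagree
    diff = abs(b - c) - (1.0 if continuity else 0.0)
    stat = max(diff, 0.0) ** 2 / (b + c)
    # Survival function of chi-square with 1 dof: erfc(sqrt(stat / 2)).
    p_value = math.erfc(math.sqrt(stat / 2.0))
    return stat, p_value
```

With made-up counts such as `mcnemar_test(25, 5)`, the p-value falls well below 0.001, whereas balanced disagreements such as `mcnemar_test(10, 10)` give no evidence of a difference in accuracy.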

Discussion and future work

- Q1: Scope of vulnerability detection and limitations.

In this research, we introduce a solution named "Lightning Cat". For the purpose of comparison with static analysis tools, we particularly focus on detecting four specific types of smart contract vulnerabilities: Re-entrancy, Timestamp-Dependency, Unhandled-Exceptions, and tx.origin. Through our comparative analysis, we observe instances where the static analysis tools fail to detect certain vulnerabilities. Our experimental results indicate that our proposed Optimized-CodeBERT outperforms the static detection tools mentioned in this paper, both in terms of recall and overall TP. At present, Lightning Cat is equipped to detect these four categories of vulnerabilities.

While the proposed solution, which captures vulnerability feature information by extracting vulnerability code functions, has shown promising results in scenarios with text length constraints, we also acknowledge limitations in our current approach when addressing cross-code and cross-function vulnerabilities. The code interactions and contextual information related to these vulnerabilities might span multiple functions or modules, making them challenging to capture with our current method. In the future, we aim to expand the detection capabilities of our model. Additionally, there is potential to extend the application of Lightning Cat to other vulnerability code detection scenarios.

- Q2: Expanding Lightning Cat to other areas of code vulnerability detection.

Lightning Cat plans to expand its vulnerability detection capabilities. Using examples of vulnerabilities such as Buffer Overflow, Null Pointer Dereference, and Logic Errors, here’s how the expansion process unfolds:

The first step is acquiring datasets containing code with these vulnerability features, which can be obtained from open-source code repositories or proprietary databases. Code mutation techniques may also be considered for data augmentation. During data preprocessing, CodeBERT will be used to convert code into fixed-length vectors suitable for the model's input format. Subsequently, the model undergoes fine-tuning through transfer learning, with iterative adjustments to hyperparameters based on dataset characteristics to enhance performance. Validation on appropriate vulnerability test sets is then used to check model accuracy and robustness. Finally, to address newly emerging vulnerabilities, Lightning Cat periodically collects new vulnerability data and updates model parameters to effectively respond to these new issues.

- Q3: What if malicious actors use this technology to discover vulnerabilities for illicit gain?

Automatic vulnerability detection technology holds immense potential in enhancing the security of smart contracts. However, it is a double-edged sword. On the one hand, it can assist developers in swiftly identifying and rectifying vulnerabilities in software, thereby elevating its security. On the other hand, if such technology falls into the hands of malicious actors, they might exploit it to uncover undisclosed vulnerabilities and launch attacks. To address this, proactive measures are essential.

Developers should regularly conduct code audits, undergo secure coding training, and adopt responsible vulnerability disclosure policies. Researchers and developers who discover security vulnerabilities are encouraged to first notify the relevant organizations or individuals privately. This provides them ample time for rectification before the information is made public. Concurrently, regular updates and maintenance of software and dependency libraries are crucial to ensure that known security vulnerabilities are addressed collaboratively. Although it might reduce the use of the tool, certain restrictions could be imposed on open-source automatic detection tools, such as limiting the use of advanced features or requiring user authentication. Undoubtedly, enhancing the security of smart contracts requires collective effort. The open-source community should foster collaboration among its members. This can be achieved by sharing best security practices, tools, and resources.

Conclusion

This paper introduces Lightning Cat, which uses deep learning methods to detect vulnerabilities in smart contracts and includes three models: Optimized-CodeBERT, Optimized-LSTM, and Optimized-CNN. We optimized and compared the three models and found that Optimized-CodeBERT achieved the best overall performance, with the best results in evaluation metrics such as Accuracy, Precision, and F1-score. This research used the CodeBERT pre-trained model for data preprocessing, which improved the ability of code semantic analysis. In data preprocessing, we extracted the functions containing the problematic code segments, which not only retained the key features of smart contract vulnerability code but also addressed the length limitation of deep learning models when processing long texts. This approach avoids issues such as unclear features due to excessively long texts or overfitting due to excessively short texts, thereby improving the model's performance. The results show that the proposed method has more reasonable data preprocessing and model optimization, resulting in better detection performance.

This paper analyzed the detection performance of each type of vulnerability and found that the Optimized-CodeBERT model outperformed Slither, Optimized-LSTM, and Optimized-CNN in detecting three types of vulnerabilities, but was inferior in one type. This is because different models have different structures, parameters, and learning algorithms, which affect their modeling and generalization abilities. Therefore, in future work, we aim to improve the performance of the three models in Lightning Cat and extend the application of our proposed Lightning Cat to more code security fields beyond smart contract vulnerabilities detection (Supplementary Information).

Data availability

The datasets generated and/or analyzed during the current study are available from the corresponding author upon reasonable request.

References

- Aggarwal, S. & Kumar, N. Cryptographic consensus mechanisms. In Advances in Computers Vol. 121 (eds Aggarwal, S. & Kumar, N.) 211–226 (Elsevier, 2021).

- Sunyaev, A. Distributed Ledger Technology (Springer International Publishing, 2020).

- Zou, W. et al. Smart contract development: Challenges and opportunities. IEEE Trans. Software Eng. 47, 2084–2106. https://doi.org/10.1109/TSE.2019.2942301 (2021).

- Wang, W. et al. Smart contract token-based privacy-preserving access control system for industrial internet of things. Digit. Commun. Netw. 9, 337–346. https://doi.org/10.1016/j.dcan.2022.10.005 (2023).

- The dao smart contract. https://etherscan.io/address/0xbb9bc244d798123fde783fcc1c72d3bb8c189413 (2016).

- The bectoken smart contract. https://etherscan.io/address/0xc5d105e63711398af9bbff092d4b6769c82f793d (2018).

- The bzrxtoken smart contract. https://etherscan.io/address/0x56d811088235F11C8920698a204A5010a788f4b3 (2020).

- Kalra, S., Goel, S., Dhawan, M. & Sharma, S. Zeus: analyzing safety of smart contracts. In Ndss, 1–12 (2018).

- Jiang, B., Liu, Y. & Chan, W. K. Contractfuzzer: Fuzzing smart contracts for vulnerability detection. In Proceedings of the 33rd ACM/IEEE International Conference on Automated Software Engineering, 259–269 (2018).

- Park, D., Zhang, Y., Saxena, M., Daian, P. & Roşu, G. A formal verification tool for ethereum vm bytecode. In Proceedings of the 2018 26th ACM joint meeting on European software engineering conference and symposium on the foundations of software engineering, 912–915 (2018).

- Luu, L., Chu, D.-H., Olickel, H., Saxena, P. & Hobor, A. Making smart contracts smarter. In Proceedings of the 2016 ACM SIGSAC conference on computer and communications security, 254–269 (2016).

- Ruskin, L. Mythril# 8. Mythril 2, 1 (1980).

- Tsankov, P. et al. Securify: Practical security analysis of smart contracts. In Proceedings of the 2018 ACM SIGSAC Conference on Computer and Communications Security, 67–82 (2018).

- Feist, J., Grieco, G. & Groce, A. Slither: A static analysis framework for smart contracts. In 2019 IEEE/ACM 2nd International Workshop on Emerging Trends in Software Engineering for Blockchain (WETSEB) (eds Feist, J. et al.) 8–15 (IEEE, 2019).

- Tikhomirov, S. et al. Smartcheck: Static analysis of ethereum smart contracts. In Proceedings of the 1st international workshop on emerging trends in software engineering for blockchain, 9–16 (2018).

- Chen, L. Z. W. C. W. Z. J. C. Z. Z. C. H. Cbgru: A detection method of smart contract vulnerability based on a hybrid model. Sensors 22 (2022).

- Liu, Z., Jiang, M., Zhang, S., Zhang, J. & Liu, Y. A smart contract vulnerability detection mechanism based on deep learning and expert rules. IEEE Access (2023).

- Zhang, L. et al. A novel smart contract vulnerability detection method based on information graph and ensemble learning. Sensors https://doi.org/10.3390/s22093581 (2022).

- Zhang, L. et al. Spcbig-ec: A robust serial hybrid model for smart contract vulnerability detection. Sensors https://doi.org/10.3390/s22124621 (2022).

- Huang, T. H.-D. Hunting the ethereum smart contract: Color-inspired inspection of potential attacks. arXiv preprint arXiv:1807.01868 (2018).

- Liao, J.-W., Tsai, T.-T., He, C.-K. & Tien, C.-W. Soliaudit: Smart contract vulnerability assessment based on machine learning and fuzz testing. In 2019 Sixth International Conference on Internet of Things: Systems, Management and Security (IOTSMS) (ed. Liao, J.-W.) 458–465 (IEEE, 2019).

- Yu, X., Zhao, H., Hou, B., Ying, Z. & Wu, B. Deescvhunter: A deep learning-based framework for smart contract vulnerability detection. In 2021 International Joint Conference on Neural Networks (IJCNN) (eds Yu, X. et al.) 1–8 (IEEE, 2021).

- Feng, Z. et al. Codebert: A pre-trained model for programming and natural languages. arXiv preprint arXiv:2002.08155 (2020).

- Salloum, S. A., Khan, R. & Shaalan, K. A survey of semantic analysis approaches. In Proceedings of the International Conference on Artificial Intelligence and Computer Vision (AICV2020) (eds Salloum, S. A. et al.) 61–70 (Springer, 2020).

- Al-Boghdady, A., El-Ramly, M. & Wassif, K. Idetect for vulnerability detection in internet of things operating systems using machine learning. Sci. Rep. 12, 17086 (2022).

- Nadkarni, P. M., Ohno-Machado, L. & Chapman, W. W. Natural language processing: An introduction. J. Am. Med. Inform. Assoc. 18, 544–551 (2011).

- Papineni, K., Roukos, S., Ward, T. & Zhu, W.-J. Bleu: a method for automatic evaluation of machine translation. In Proceedings of the 40th annual meeting of the Association for Computational Linguistics, 311–318 (2002).

Sutskever, I., Vinyals, O. & Le, Q. V. Sequence to sequence learning with neural networks. Adv. Neural Inform. Process. Syst.27 (2014).

Sutskever, I., Vinyals, O. & Le, Q. V. 使用神经网络进行序列到序列学习。高级神经信息。过程。系统27(2014)。 -

Liu, Y. et al. Roberta: A robustly optimized bert pretraining approach. arXiv preprint arXiv:1907.11692 (2019).

Gao, Z. When deep learning meets smart contracts. In Proceedings of the 35th IEEE/ACM International Conference on Automated Software Engineering, ASE ’20, 1400–1402, https://doi.org/10.1145/3324884.3418918 (Association for Computing Machinery, New York, NY, USA, 2021).

Alvarez, J. L. H., Ravanbakhsh, M. & Demir, B. S2-cgan: Self-supervised adversarial representation learning for binary change detection in multispectral images. In IGARSS 2020–2020 IEEE International Geoscience and Remote Sensing Symposium (ed. Alvarez, J. L. H.) 2515–2518 (IEEE, 2020).

Liao, S. & Grishman, R. Using document level cross-event inference to improve event extraction. In Proceedings of the 48th annual meeting of the association for computational linguistics, 789–797 (2010).

Zhang, Y. et al. Delesmell: Code smell detection based on deep learning and latent semantic analysis. Knowl.-Based Syst. 255, 109737 (2022).

Yuan, X., Lin, G., Tai, Y. & Zhang, J. Deep neural embedding for software vulnerability discovery: Comparison and optimization. Secur. Commun. Netw. 2022, 1–12 (2022).

Church, K. W. Word2vec. Nat. Lang. Eng. 23, 155–162 (2017).

Joulin, A. et al. Fasttext.zip: Compressing text classification models. arXiv preprint arXiv:1612.03651 (2016).

Pennington, J., Socher, R. & Manning, C. D. Glove: Global vectors for word representation. In Proceedings of the 2014 conference on empirical methods in natural language processing (EMNLP), 1532–1543 (2014).

Xu, A., Dai, T., Chen, H., Ming, Z. & Li, W. Vulnerability detection for source code using contextual lstm. In 2018 5th International Conference on Systems and Informatics (ICSAI), 1225–1230, https://doi.org/10.1109/ICSAI.2018.8599360 (2018).

Rossini, M. Slither audited smart contracts dataset. https://huggingface.co/datasets/mwritescode/slither-audited-smart-contracts (2022).

Smartbugs-wild. https://github.com/smartbugs/smartbugs-wild (2019).

Ghaleb, A. & Pattabiraman, K. How effective are smart contract analysis tools? Evaluating smart contract static analysis tools using bug injection. In Proceedings of the 29th ACM SIGSOFT International Symposium on Software Testing and Analysis (2020).

Hofstätter, S., Zamani, H., Mitra, B., Craswell, N. & Hanbury, A. Local self-attention over long text for efficient document retrieval. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, 2021–2024 (2020).

Huang, Z., Xu, W. & Yu, K. Bidirectional lstm-crf models for sequence tagging. arXiv preprint arXiv:1508.01991 (2015).

Sherstinsky, A. Fundamentals of recurrent neural network (rnn) and long short-term memory (lstm) network. Physica D 404, 132306 (2020).

Malek, S., Melgani, F. & Bazi, Y. One-dimensional convolutional neural networks for spectroscopic signal regression. J. Chemom. 32, e2977 (2018).

Harbola, S. & Coors, V. One dimensional convolutional neural network architectures for wind prediction. Energy Convers. Manag. 195, 70–75 (2019).

Tato, A. & Nkambou, R. Improving adam optimizer. https://openreview.net/forum?id=HJfpZq1DM (2018).

Lachenbruch, P. A. Mcnemar test. Wiley StatsRef: Statistics Reference Online (2014).

Originally published by Xueyan Tang, Yuying Du, Alan Lai, Ze Zhang & Lingzhi Shi: Deep learning-based solution for smart contract vulnerabilities detection
